I just wrote about The Hypocrisy of the Web . In order to check what others think about this issue I posted the following question to Yahoo Answers: is adding fresh content to your site hypocrisy?

In a few minutes I got very interesting answers:

1. Here's the answer that johnwaynenoblepi gave:

Yes and No. Some users are actually getting paid for the traffic that goes to there website as a promotion for some other product. That is thier livelyhood. On the other hand thier are mischiefious individuals out thier with no education that have nothing better to do than to use someone elses program to entice website visitors into clicking on something in their website that has destructive consequences. What comes around, goes around.

John

A+ Certified Professional

2. Here's the answer that serf gave:

Why would it be hypocricy? That doesn't make any sense.

3. Here's the answer that Mmmdlite gave:

People have a right to post whatever ever they want on their personal space. It's not hypocracy to try to generate traffic. This is the same reason why corporations have TV commercials...it's not hypocrytical, it's a way to gain business.

And let's take a look at the definition of hypocrisy:

1. The practice of professing beliefs, feelings, or virtues that one does not hold or possess; falseness.

2. An act or instance of such falseness.

Unless what they are posting goes against what they believe, how could it be hypocricy?

4. Here's the answer that hazel_nut333 gave:

Hypocrisy is if a person advocates Anti-New Contents and went about and add new contents to their site. That's the definition of hypocrisy.

You, for some reason, think people should only add contents to their sites if and only if it for the sole purpose of sharing knowledge.

What a closed minded and idiotiotic way to portray the Internet. IT'S THE FREAKING INTERNET!!!

5. Here's the answer that Jane Furrows gave:

Hypocrisy isn't the right word. As long as they have permission to use those articles, it's okay, especially if they have other articles there that people would be interested in and would help people find what they are looking for. The sites that annoy me are those that just mirror Wikipedia content but with tons of ads all over the place. I always make sure to go straight to the source for those.

I want to thank each of these kind answerers.

I think using Yahoo Answers for such a survey is fun and a very good way to learn something new.

BTW – This is a nice and easy way to add fresh content to your site.

Tuesday, January 31, 2006

Monday, January 30, 2006

Better Yahoo Answers

After I wrote about the need to improve Google answers I checked the first results in Yahoo for the query words "yahoo Answers" and made a comparison between these search results and the new QTsearch-Ranking-Version results.

QTsearch-Ranking-Version picked up the best and most extensive answer out of 5, while on Yahoo the 2 top results out of 1,030,000 were more narrow and restricted and only the third result was satisfactory (the same that QTsearch picked up).

I thought this comparison was quite amazing since Yahoo is the owner of Yahoo Answers and one could expect that the first or second results will be the best.

I think because the current search engines are retrieving so many results (1,030,000 in this case) they are not built to tailor better answers for thier users needs and there is room for QTsearch algorithm to be implemented on any such search engine in order to give users the best answers from the first results-pages that are retrieved.

Following is the comparison itself:

QTsearch best result:

The Birth of Yahoo Answers: http://blog.searchenginewatch.com/blog/051207-220118

Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service. The service allows any registered Yahoo user to ask just about any question and hopefully get an answer from another member of the question answering community. Access to Yahoo Answers is free.

Yahoo Answers appears to be definite extension of what Yahoo's Senior Vice President, Search and Marketplace, Jeff Weiner, calls FUSE (Find, Use, Share, Expand) and Yahoo's numerous efforts into online community building with services like Web 2.0.

At the moment Yahoo Answers offers 23 top-level categories like:

Yahoo Answers uses a point and level system to reward participants:

Looking at the Yahoo Answers point system, it appears to me that there is an incentive to answer as many questions as possible as quickly as possible without worrying about accuracy.I think that's going to need some tuning.

Yahoo Results 1 - 5 of about 1,030,000

1 Yahoo! Answers

a place where people ask each other questions on any topic, and get answers by sharing facts, opinions, and personal experiences.

answers.yahoo.com

2. Yahoo! (Nasdaq: YHOO)

Yahoo! Internet portal provides email, news, shopping, web search, music, fantasy sports, and many other online products and services to consumers and businesses worldwide.

yahoo.com

3. The Birth of Yahoo Answers

You are in the: ClickZ Network ... Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service ... Access to Yahoo Answers is free. Yahoo Answers appears to be definite ...

blog.searchenginewatch.com/blog/051207-220118

QTsearch-Ranking-Version picked up the best and most extensive answer out of 5, while on Yahoo the 2 top results out of 1,030,000 were more narrow and restricted and only the third result was satisfactory (the same that QTsearch picked up).

I thought this comparison was quite amazing since Yahoo is the owner of Yahoo Answers and one could expect that the first or second results will be the best.

I think because the current search engines are retrieving so many results (1,030,000 in this case) they are not built to tailor better answers for thier users needs and there is room for QTsearch algorithm to be implemented on any such search engine in order to give users the best answers from the first results-pages that are retrieved.

Following is the comparison itself:

QTsearch best result:

The Birth of Yahoo Answers: http://blog.searchenginewatch.com/blog/051207-220118

Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service. The service allows any registered Yahoo user to ask just about any question and hopefully get an answer from another member of the question answering community. Access to Yahoo Answers is free.

Yahoo Answers appears to be definite extension of what Yahoo's Senior Vice President, Search and Marketplace, Jeff Weiner, calls FUSE (Find, Use, Share, Expand) and Yahoo's numerous efforts into online community building with services like Web 2.0.

At the moment Yahoo Answers offers 23 top-level categories like:

Yahoo Answers uses a point and level system to reward participants:

Looking at the Yahoo Answers point system, it appears to me that there is an incentive to answer as many questions as possible as quickly as possible without worrying about accuracy.I think that's going to need some tuning.

Yahoo Results 1 - 5 of about 1,030,000

1 Yahoo! Answers

a place where people ask each other questions on any topic, and get answers by sharing facts, opinions, and personal experiences.

answers.yahoo.com

2. Yahoo! (Nasdaq: YHOO)

Yahoo! Internet portal provides email, news, shopping, web search, music, fantasy sports, and many other online products and services to consumers and businesses worldwide.

yahoo.com

3. The Birth of Yahoo Answers

You are in the: ClickZ Network ... Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service ... Access to Yahoo Answers is free. Yahoo Answers appears to be definite ...

blog.searchenginewatch.com/blog/051207-220118

Sunday, January 29, 2006

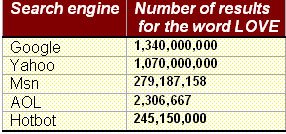

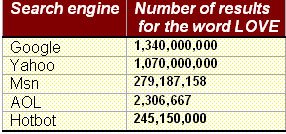

Looking for Love

The expression "Looking for Love" is a homonym - an expression with multiple meanings.

When you're looking for love in the real world you expect to find one

match between your soul and one other soul.

When you're looking for love in the virtual world you expect to find a lot of matches between the word 'love' in your query and the word 'love' in the results.

I hope that one day there will be no such division between the real and the virtual worlds and search engines will give users only one answer – the one that matches their needs!

The same goes for the expression "Looking for God"

When you're looking for love in the real world you expect to find one

match between your soul and one other soul.

When you're looking for love in the virtual world you expect to find a lot of matches between the word 'love' in your query and the word 'love' in the results.

I hope that one day there will be no such division between the real and the virtual worlds and search engines will give users only one answer – the one that matches their needs!

The same goes for the expression "Looking for God"

Saturday, January 28, 2006

Yahoo Answers

I just signed up to Yahoo Answers Beta and posted the following question:

Did Yahoo Answers Beta take the idea "to get answers from real people" from Wondir

(http://www. wondir. com/)?

Then I collected some excerpts with QTsearch in order to understand what Yahoo Answers is all about; and then I checked whether other people commented about the relationship between Yahoo Answers and Wondir .

What is Yahoo Answers all about?

The Birth of Yahoo Answers

http://blog. searchenginewatch. com/blog/051207-220118

Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service. The service allows any registered Yahoo user to ask just about any question and hopefully get an answer from another member of the question answering community. Access to Yahoo Answers is free.

Yahoo Answers appears to be definite extension of what Yahoo's Senior Vice President, Search and Marketplace, Jeff Weiner, calls FUSE (Find, Use, Share, Expand) and Yahoo's numerous efforts into online community building with services like Web 2. 0.

At the moment Yahoo Answers offers 23 top-level categories like:

Yahoo Answers uses a point and level system to reward participants:

Looking at the Yahoo Answers point system, it appears to me that there is an incentive to answer as many questions as possible as quickly as possible without worrying about accuracy. I think that's going to need some tuning.

Both a simple search box and some advanced features are available for asked and answered questions. Yahoo Answers will be promoted on Yahoo Web Results pages. For example, a user might see a link to seek an answer to their info need on Yahoo Answers.

Yahoo! Search blog: Asking the Internet

http://www. ysearchblog. com/archives/000221. html

Sean O'Hagan made an interesting proposal. To spice up Yahoo Answers a little, more specific categories might be of great advantage for users to join and help each other!

I believe Yahoo! will prove itself in the coming times with Yahoo Answers

ResearchBuzz: Yahoo Launches Yahoo Answers

http://www. researchbuzz. org/2006/01/yahoo_launches_yahoo_answers. shtml

I drilled down the category listing to Science & Math / Zoology. Yahoo Answers divides the questions into unanswered (the most recently-asked questions are listed first) and answered.

Smart Mobs: Yahoo Answers:

http://www. smartmobs. com/archive/2006/01/11/yahoo_answers. html

Luke Biewald sent me some commentary on the new Yahoo Answers service launched in December:

You have to see these to believe them. So what is the problem with Yahoo Answers? The implementation seems to be working very hard to reduce questions like this. While they have built in a point system, it doesn't seem to have an effect in bubbling up good questions or answers.

Listed below are links to weblogs that reference Yahoo Answers:

Just caught this on Smart Mobs, an answer from Luke Biewald (really a Yahoo employee?) about the Yahoo Answers service:"This idea is really obvious, but has the potential to be as transformative as the Wikipedia.

What do people say about Yahoo answers and Wondir?

IP Democracy

http://www. ipdemocracy. com/archives/2005/12/08/index. php

Turns out Revolution Health Group is controlled by Steve Case, whose assortment of recent investments are reviewed in this IPD post. When Revolution’s acquisition of Wondir was announced a few months ago, it was presented as one of multiple investments closely tied the healthcare industry. But the launch of Yahoo Answers (and Price’s post), serves as a reminder that Wondir has broader capabilities and potential applications. It makes one wonder if Case has bigger plans for Wondir.

Yahoo! Answers Relies on the Kindness—and Knowledgeability—of. . . : http://www. infotoday. com/newsbreaks/nb051219-1. shtml

Other companies in the answer business have also moved in this direction. Answers. com recently acquired a search engine technology company called Brainboost that uses natural language processing to outline and analyze search results for context. In May, I reported on another collaborative answer service called Wondir, developed by information industry stalwart Matt Koll (“Wondir Launches Volunteer Virtual Reference Service,” http://www. infotoday. com/newsbreaks/nb050502-1. shtml

).

Mayer indicated that Yahoo! had spoken with Koll and looked at Wondir but ultimately decided that the number of users Yahoo!Answers would draw from the mammoth Yahoo! userbase would make it more successful than Wondir.

Hello World: Yahoo Answers

http://yanivg. blogspot. com/2005/12/yahoo-answers. html

Yahoo Answers is not the first attempt at this. In fact, Susan Mernit referred to it as a YAAN - Yet Another Answer Network, comparing it to Wondir and to others. While it's true that Yahoo Answers is not much different then Wondir, which has been around for quite some time, the user experience in the Yahoo implementation is considerably more slick, and is likely to get better and better. Not to mention the fact that, not really surprisingly, Yahoo Answer got on its first day traffic similar to what Wondir is seeing after ~3 years of operation.

Like Wondir, Yahoo Answers suffers from the "initial impression" effect (we call this "The Harry Potter Effect" - whenever a new Harry Potter book is released, Wondir is swamped with Potter-related questions. . . ) - when a user enters the system and is exposed to the "most recent" questions list, the content of these questions determines the flavor of the service in the eyes of the user. And given the random nature of this most recent list, and the topics which people are most interested at, that initial impression may cause users who are valuable knowledge sources to click-back-away. Again, if leveraging the long tail of knowledge is a goal of the service, thought should be given to this topic as well.

TechCrunch " Yahoo Answers Launches

http://www. techcrunch. com/2005/12/08/yahoo-answers-launches

My understanding is that the service will be somewhat similar to Yahoo Answers, Wondir, Google Answers and Oyogi, with some key differences that the founders hope will result in significantly more user participation, and better answers.

Did Yahoo Answers Beta take the idea "to get answers from real people" from Wondir

(http://www. wondir. com/)?

Then I collected some excerpts with QTsearch in order to understand what Yahoo Answers is all about; and then I checked whether other people commented about the relationship between Yahoo Answers and Wondir .

What is Yahoo Answers all about?

The Birth of Yahoo Answers

http://blog. searchenginewatch. com/blog/051207-220118

Now out in beta is Yahoo Answers, Yahoo's new social networking/online community/question answering service. The service allows any registered Yahoo user to ask just about any question and hopefully get an answer from another member of the question answering community. Access to Yahoo Answers is free.

Yahoo Answers appears to be definite extension of what Yahoo's Senior Vice President, Search and Marketplace, Jeff Weiner, calls FUSE (Find, Use, Share, Expand) and Yahoo's numerous efforts into online community building with services like Web 2. 0.

At the moment Yahoo Answers offers 23 top-level categories like:

Yahoo Answers uses a point and level system to reward participants:

Looking at the Yahoo Answers point system, it appears to me that there is an incentive to answer as many questions as possible as quickly as possible without worrying about accuracy. I think that's going to need some tuning.

Both a simple search box and some advanced features are available for asked and answered questions. Yahoo Answers will be promoted on Yahoo Web Results pages. For example, a user might see a link to seek an answer to their info need on Yahoo Answers.

Yahoo! Search blog: Asking the Internet

http://www. ysearchblog. com/archives/000221. html

Sean O'Hagan made an interesting proposal. To spice up Yahoo Answers a little, more specific categories might be of great advantage for users to join and help each other!

I believe Yahoo! will prove itself in the coming times with Yahoo Answers

ResearchBuzz: Yahoo Launches Yahoo Answers

http://www. researchbuzz. org/2006/01/yahoo_launches_yahoo_answers. shtml

I drilled down the category listing to Science & Math / Zoology. Yahoo Answers divides the questions into unanswered (the most recently-asked questions are listed first) and answered.

Smart Mobs: Yahoo Answers:

http://www. smartmobs. com/archive/2006/01/11/yahoo_answers. html

Luke Biewald sent me some commentary on the new Yahoo Answers service launched in December:

You have to see these to believe them. So what is the problem with Yahoo Answers? The implementation seems to be working very hard to reduce questions like this. While they have built in a point system, it doesn't seem to have an effect in bubbling up good questions or answers.

Listed below are links to weblogs that reference Yahoo Answers:

Just caught this on Smart Mobs, an answer from Luke Biewald (really a Yahoo employee?) about the Yahoo Answers service:"This idea is really obvious, but has the potential to be as transformative as the Wikipedia.

What do people say about Yahoo answers and Wondir?

IP Democracy

http://www. ipdemocracy. com/archives/2005/12/08/index. php

Turns out Revolution Health Group is controlled by Steve Case, whose assortment of recent investments are reviewed in this IPD post. When Revolution’s acquisition of Wondir was announced a few months ago, it was presented as one of multiple investments closely tied the healthcare industry. But the launch of Yahoo Answers (and Price’s post), serves as a reminder that Wondir has broader capabilities and potential applications. It makes one wonder if Case has bigger plans for Wondir.

Yahoo! Answers Relies on the Kindness—and Knowledgeability—of. . . : http://www. infotoday. com/newsbreaks/nb051219-1. shtml

Other companies in the answer business have also moved in this direction. Answers. com recently acquired a search engine technology company called Brainboost that uses natural language processing to outline and analyze search results for context. In May, I reported on another collaborative answer service called Wondir, developed by information industry stalwart Matt Koll (“Wondir Launches Volunteer Virtual Reference Service,” http://www. infotoday. com/newsbreaks/nb050502-1. shtml

).

Mayer indicated that Yahoo! had spoken with Koll and looked at Wondir but ultimately decided that the number of users Yahoo!Answers would draw from the mammoth Yahoo! userbase would make it more successful than Wondir.

Hello World: Yahoo Answers

http://yanivg. blogspot. com/2005/12/yahoo-answers. html

Yahoo Answers is not the first attempt at this. In fact, Susan Mernit referred to it as a YAAN - Yet Another Answer Network, comparing it to Wondir and to others. While it's true that Yahoo Answers is not much different then Wondir, which has been around for quite some time, the user experience in the Yahoo implementation is considerably more slick, and is likely to get better and better. Not to mention the fact that, not really surprisingly, Yahoo Answer got on its first day traffic similar to what Wondir is seeing after ~3 years of operation.

Like Wondir, Yahoo Answers suffers from the "initial impression" effect (we call this "The Harry Potter Effect" - whenever a new Harry Potter book is released, Wondir is swamped with Potter-related questions. . . ) - when a user enters the system and is exposed to the "most recent" questions list, the content of these questions determines the flavor of the service in the eyes of the user. And given the random nature of this most recent list, and the topics which people are most interested at, that initial impression may cause users who are valuable knowledge sources to click-back-away. Again, if leveraging the long tail of knowledge is a goal of the service, thought should be given to this topic as well.

TechCrunch " Yahoo Answers Launches

http://www. techcrunch. com/2005/12/08/yahoo-answers-launches

My understanding is that the service will be somewhat similar to Yahoo Answers, Wondir, Google Answers and Oyogi, with some key differences that the founders hope will result in significantly more user participation, and better answers.

Friday, January 27, 2006

The Hypocrisy of the Web

Roger Landry writes on his Blog about "Ranking in Google the Secret's Out". This is one of the best examples I ever saw for the habits people are forced to adopt in order to survive: millions of Web users are writing not in order to express themselves or truly share information with others but in order to add fresh content to their sites so that there will be enough traffic of visitors to buy whatever they are selling. The macro picture is quite amazing – the Web is getting full with hypocrisy!

I'm not saying that hypocrisy is bad, or that survival is bad –on the contrary, all this shows how vital is the game called competition!

I'm not saying that hypocrisy is bad, or that survival is bad –on the contrary, all this shows how vital is the game called competition!

Thursday, January 26, 2006

Better Answers

Google is so successful that we don't expect it to get better, but there is always room for improvement.

Today I looked for the words "Table of Contents" on QTsearch and got 3 answers – the first was exceptionally good because it described what a "Table of Contents" is and the others were good enough because they brought an example of a "Table of Contents". In Google I got 230,000,000 results and the top 10 were good enough examples of a "Table of Contents". I thought that if search engines will see to it that the first answer for any query will be an answer to the question "what is this" users will feel much more satisfied than in the current situation, in which they get confused by the many examples and don't get the overall picture.

So here are the sources for this recommendation: (you can repeat this little experiment and see for yourselves):

QTsearch:

http://en.wikipedia.org/wiki?title=Table_of_contents

A table of contents is an organized list of titles for quick information on the summary of a book or document and quickly directing the reader to any topic.Usually, printed tables of contents indicate page numbers where each section starts, while online ones offer links to go to each section. In English works the table of contents is at the beginning of a book; in French it is at the back, by the index.

Table of contents for the Pachypodium genus : http://en.wikipedia.org/wiki?title=Table_of_contents_for_the_Pachypodium_genus

Table of Contents for the Pachypodium Genus

Infotrieve Online : http://www.infotrieve.com/journals/toc_main.asp

Table of Contents (TOC) is a database providing the display of tables of contents of journals.The TOC alert service delivers tables of contents to you as they arrive from the publisher based on journal titles you've pre-selected and entered into your TOC profile. Only titles marked with the icon are available for alert and delivery.

Google:

Web Style Guide, 2nd Edition - 12:22am

... by Design book cover Buy Sarah Horton's new book, Access by Design, at Amazon.com • Web site hosted by Pair.com • Print table of contents. Jump to top ...

www.webstyleguide.com/ - 20k - 21 Jan 2006 - Cached - Similar pages - Remove result

Abridged Table of Contents

Abridged Table of Contents ... How to Cite • Editorial Information • About the SEP • Unabridged Table of Contents • Advanced Search • Advanced Tools ...

plato.stanford.edu/contents.html - 101k - Cached - Similar pages - Remove result

Smithsonian Institution

Composed of sixteen museums and galleries, the National Zoo, and numerous research facilities in the United States and abroad.

www.si.edu/ - 59k - 21 Jan 2006 - Cached - Similar pages - Remove result

Today I looked for the words "Table of Contents" on QTsearch and got 3 answers – the first was exceptionally good because it described what a "Table of Contents" is and the others were good enough because they brought an example of a "Table of Contents". In Google I got 230,000,000 results and the top 10 were good enough examples of a "Table of Contents". I thought that if search engines will see to it that the first answer for any query will be an answer to the question "what is this" users will feel much more satisfied than in the current situation, in which they get confused by the many examples and don't get the overall picture.

So here are the sources for this recommendation: (you can repeat this little experiment and see for yourselves):

QTsearch:

http://en.wikipedia.org/wiki?title=Table_of_contents

A table of contents is an organized list of titles for quick information on the summary of a book or document and quickly directing the reader to any topic.Usually, printed tables of contents indicate page numbers where each section starts, while online ones offer links to go to each section. In English works the table of contents is at the beginning of a book; in French it is at the back, by the index.

Table of contents for the Pachypodium genus : http://en.wikipedia.org/wiki?title=Table_of_contents_for_the_Pachypodium_genus

Table of Contents for the Pachypodium Genus

Infotrieve Online : http://www.infotrieve.com/journals/toc_main.asp

Table of Contents (TOC) is a database providing the display of tables of contents of journals.The TOC alert service delivers tables of contents to you as they arrive from the publisher based on journal titles you've pre-selected and entered into your TOC profile. Only titles marked with the icon are available for alert and delivery.

Google:

Web Style Guide, 2nd Edition - 12:22am

... by Design book cover Buy Sarah Horton's new book, Access by Design, at Amazon.com • Web site hosted by Pair.com • Print table of contents. Jump to top ...

www.webstyleguide.com/ - 20k - 21 Jan 2006 - Cached - Similar pages - Remove result

Abridged Table of Contents

Abridged Table of Contents ... How to Cite • Editorial Information • About the SEP • Unabridged Table of Contents • Advanced Search • Advanced Tools ...

plato.stanford.edu/contents.html - 101k - Cached - Similar pages - Remove result

Smithsonian Institution

Composed of sixteen museums and galleries, the National Zoo, and numerous research facilities in the United States and abroad.

www.si.edu/ - 59k - 21 Jan 2006 - Cached - Similar pages - Remove result

Tuesday, January 24, 2006

QTsearch

QTsearch has some features that are different from other current search engines. Some of these features can be utilized by these other search engines in order to help users navigate and find what they need.

1. Micro contents - current search engines supply macro contents which give users more than they can chew, while QTsearch produces relevant micro contents.

2. One results page – QTsearch provides the results themselves on one page instead of providing links to the results and snippets to describe them.

3. Few manageable results – and not daunting numbers like 100000 results and more.

4. No advertisements.

5. Meta search – QTsearch extracts chunks from multiple sources so that users can get answers even if one of the sources doesn't have it.

6. Snippets - Current search engines have old fashioned snippets that confuse users and let them guess what's in the link. QTsearch can make clear transparent snippets (from their first results pages) that help users navigate.

7. Suggestions - Some current search engines don't have suggestions to refine queries while QTsearch users can add the suggestions to their new queries in order to refine them.

8. Best answers - QTsearch algorithm can be implemented on any current search engine and give users the best answers from the first results-pages that are retrieved.

9. Accessibility - current search engines have severe accessibility problems while QTsearch can manipulate their results so that those who have difficulties in reading will hear the information with Opera browser.

10. Mobile - QTsearch can make current search engine results fit to Mobile devices.

11. Sorting – QTsearch lets users process the results on-line so that they can filter manually all the superfluous information and get the cleanest possible answers.

1. Micro contents - current search engines supply macro contents which give users more than they can chew, while QTsearch produces relevant micro contents.

2. One results page – QTsearch provides the results themselves on one page instead of providing links to the results and snippets to describe them.

3. Few manageable results – and not daunting numbers like 100000 results and more.

4. No advertisements.

5. Meta search – QTsearch extracts chunks from multiple sources so that users can get answers even if one of the sources doesn't have it.

6. Snippets - Current search engines have old fashioned snippets that confuse users and let them guess what's in the link. QTsearch can make clear transparent snippets (from their first results pages) that help users navigate.

7. Suggestions - Some current search engines don't have suggestions to refine queries while QTsearch users can add the suggestions to their new queries in order to refine them.

8. Best answers - QTsearch algorithm can be implemented on any current search engine and give users the best answers from the first results-pages that are retrieved.

9. Accessibility - current search engines have severe accessibility problems while QTsearch can manipulate their results so that those who have difficulties in reading will hear the information with Opera browser.

10. Mobile - QTsearch can make current search engine results fit to Mobile devices.

11. Sorting – QTsearch lets users process the results on-line so that they can filter manually all the superfluous information and get the cleanest possible answers.

Monday, January 23, 2006

Google Competitors

Not so long ago, on December 21, 2005, I wrote about the European and the Japanese Google competitors – and now I read on the news about another such group that the Norwegians are organizing. As I already noted I believe no group will succeed to beat Google on its own MACRO-CONTENT game field. Only a group that will develop a new MICRO-CONTENT search engine will have a chance to build a meaninful machine.

Here are some excerpts about these new competitors:

http://www.pandia.com/sew/162-europan-search-engine-alliance-to-challenge-google.html

According to the Norwegian policy newletter Mandag Morgen (Monday Morning) Fast and Schibsted are now building an research alliance with Accenture and several universities in Norway, Ireland and the US, including the Norwegian University of Science and Technology (which gave birth to Fast), the University of Tromsø, the University of Oslo, the Norwegian School of Management, Dublin City University, University College Dublin and Cornell University in the US.

The partners are to invest some NOK 340 million (US$ 51 million) in the new research center Information Access Disruptions (iAd) over an eight year period, Monday Morning reports.

http://www.pandia.com/sew/107-fast-is-back-on-the-web-search-engine-scene.html

One of the reasons for this Norwegian search engine bonanza is that Google has expressed a special interest in this Internet savvy market. These companies would like to present localized search engines for the Norwegian Internet users before google.no becomes something more than a Norwegian language clone of google.com.

http://www.ketupa.net/schibsted.htm - Schibsted group: Overview

The Norwegian Schibsted group is a newspaper and book publisher with online and film interests, competing with Egmont, Sanoma WSOY, Metro and Bonnier.

Schibsted owns Aftenposten, the largest newspaper in Norway, 49.9% of Sweden's leading paper, Aftonbladet and all of Postimees, Estonia's largest daily.The group includes Norwegian tabloid Verdens Gang and papers in Spain and Estonia.

http://en.wikipedia.org/wiki/Schibsted - Schibsted - Wikipedia, the free encyclopedia

Schibsted is one of the leading media groups in Scandinavia.Schibsteds present activities relate to media products and rights in the field of newspapers, television, film, publishing, multimedia and mobile services. Schibsted has ownership in a variety of formats; paper, the Internet, television, cinema, video, DVD and wireless terminals (mobile telephones, PDAs etc.).Schibsteds headquarters are in Oslo. Most of the groups operations are based in Norway and Sweden, but the group has operations in 11 European countries; Spain, France and Switzerland among others.

http://www.ineedhits.com/free-tools/blog/2005/10/australian-scandianvian-partnership.aspx

- ineedhits SEM Blog: Australian-Scandianvian Partnership Takes on ...

Australian directory business Sensis has announced a joint venture agreement with Norwegian search technology company Fast Search & Transfer and Scandinavian media firm Schibsted to market Internet search and advertising capabilities throughout Europe. Sensis used Fast's search technology on its Australian search site sensis.com.au, which had over 1 million unique users in September...The joint venture will aim to sell its capabilities to directories and media company who want to create a search engine of their own which contains both proprietary local content and relevant web results. Sensis and Schibsted themselves are the joint venture's first customers, with others in the pipeline. However, since the big boys of search, especially Google and Yahoo!, are also heavily investing in local search, the joint venture will most likely face stiff competition. The new joint venture aims to employ 50 staff members by the end of the year, even though Sensis has announced that it will lay off up to 250 staff in Australia as part of a strategic realignment. The joint venture's headquarters will be located in London.

Here are some excerpts about these new competitors:

http://www.pandia.com/sew/162-europan-search-engine-alliance-to-challenge-google.html

According to the Norwegian policy newletter Mandag Morgen (Monday Morning) Fast and Schibsted are now building an research alliance with Accenture and several universities in Norway, Ireland and the US, including the Norwegian University of Science and Technology (which gave birth to Fast), the University of Tromsø, the University of Oslo, the Norwegian School of Management, Dublin City University, University College Dublin and Cornell University in the US.

The partners are to invest some NOK 340 million (US$ 51 million) in the new research center Information Access Disruptions (iAd) over an eight year period, Monday Morning reports.

http://www.pandia.com/sew/107-fast-is-back-on-the-web-search-engine-scene.html

One of the reasons for this Norwegian search engine bonanza is that Google has expressed a special interest in this Internet savvy market. These companies would like to present localized search engines for the Norwegian Internet users before google.no becomes something more than a Norwegian language clone of google.com.

http://www.ketupa.net/schibsted.htm - Schibsted group: Overview

The Norwegian Schibsted group is a newspaper and book publisher with online and film interests, competing with Egmont, Sanoma WSOY, Metro and Bonnier.

Schibsted owns Aftenposten, the largest newspaper in Norway, 49.9% of Sweden's leading paper, Aftonbladet and all of Postimees, Estonia's largest daily.The group includes Norwegian tabloid Verdens Gang and papers in Spain and Estonia.

http://en.wikipedia.org/wiki/Schibsted - Schibsted - Wikipedia, the free encyclopedia

Schibsted is one of the leading media groups in Scandinavia.Schibsteds present activities relate to media products and rights in the field of newspapers, television, film, publishing, multimedia and mobile services. Schibsted has ownership in a variety of formats; paper, the Internet, television, cinema, video, DVD and wireless terminals (mobile telephones, PDAs etc.).Schibsteds headquarters are in Oslo. Most of the groups operations are based in Norway and Sweden, but the group has operations in 11 European countries; Spain, France and Switzerland among others.

http://www.ineedhits.com/free-tools/blog/2005/10/australian-scandianvian-partnership.aspx

- ineedhits SEM Blog: Australian-Scandianvian Partnership Takes on ...

Australian directory business Sensis has announced a joint venture agreement with Norwegian search technology company Fast Search & Transfer and Scandinavian media firm Schibsted to market Internet search and advertising capabilities throughout Europe. Sensis used Fast's search technology on its Australian search site sensis.com.au, which had over 1 million unique users in September...The joint venture will aim to sell its capabilities to directories and media company who want to create a search engine of their own which contains both proprietary local content and relevant web results. Sensis and Schibsted themselves are the joint venture's first customers, with others in the pipeline. However, since the big boys of search, especially Google and Yahoo!, are also heavily investing in local search, the joint venture will most likely face stiff competition. The new joint venture aims to employ 50 staff members by the end of the year, even though Sensis has announced that it will lay off up to 250 staff in Australia as part of a strategic realignment. The joint venture's headquarters will be located in London.

Sunday, January 22, 2006

False Positives

1. Spider

Today I searched on Google for the words "spider identification" and got a site with identification of brown spiders .

I meant to search for "search engine spider identification" but I was so sure that "spider" is a computer program that I forgot the fact that this word is a homonym, a word that has multiple meanings. It quite amused me and I thought it would be nice to collect false positives on this web page and to update the page whenever I find new ones. Readers are invited to add False Positives on their comments form.

2. Home

On Wikipedia I found a False Positive right in the definition of the term "False Positive".

3. Pray and 'Bare feet'

Beware of homonyms:

4. Apple

If you enter the word "apple" into Google search looking for the tree or the fruit of that tree you'll have to scan a few hundred results about the company by that name before you find what you asked for.

5. organization and color

Aside from cultural differences there are spelling differences as well. American spellings vary from English; you might be missing your answer by only searching organisation (organization) and color (colour).

6. Polish/polish

Homonyms

7. Police

If you type in police, you get a lot of pages about the rock group.

8. Football

Free text

9. Cobra

10. China

11. Cook

http://www.sciam.com/article.cfm?articleID=00048144-10D2-1C70-84A9809EC588EF21&pageNumber=5&catID=2

An intelligent search program can sift through all the pages of people whose name is "Cook" (sidestepping all the pages relating to cooks, cooking, the Cook Islands and so forth),

12. cat/cats

http://en.wikipedia.org/wiki/Folksonomy

Today I searched on Google for the words "spider identification" and got a site with identification of brown spiders .

I meant to search for "search engine spider identification" but I was so sure that "spider" is a computer program that I forgot the fact that this word is a homonym, a word that has multiple meanings. It quite amused me and I thought it would be nice to collect false positives on this web page and to update the page whenever I find new ones. Readers are invited to add False Positives on their comments form.

2. Home

On Wikipedia I found a False Positive right in the definition of the term "False Positive".

In computer database searching, false positives are documents that are retrieved by a search despite their irrelevance to the search question. False positives are common in full text searching, in which the search algorithm examines all of the text in all of the stored documents in an attempt to match one or more search terms supplied by the user.

Most false positives can be attributed to the deficiencies of natural language, which is often ambiguous: the term "home," for example, may mean "a person's dwelling" or "the main or top-level page in a Web site." The false positive rate can be reduced by using a controlled vocabulary, but this solution is expensive because the vocabulary must be developed by an expert and applied to documents by trained indexers.

3. Pray and 'Bare feet'

Beware of homonyms:

Two words are homonyms if they are pronounced or spelled the same way but have different meanings. A good example is 'pray' and 'prey'. If you look up information on a 'praying mantis', you'll find facts about a religious insect rather than one that seeks out and eats others. 'Bare feet' and 'bear feet' are two very different things! If you use the wrong word to describe your search you will find interesting, but wrong, results.

4. Apple

If you enter the word "apple" into Google search looking for the tree or the fruit of that tree you'll have to scan a few hundred results about the company by that name before you find what you asked for.

5. organization and color

Aside from cultural differences there are spelling differences as well. American spellings vary from English; you might be missing your answer by only searching organisation (organization) and color (colour).

6. Polish/polish

Homonyms

can affect your search: China/china or Polish/polish.

7. Police

If you type in police, you get a lot of pages about the rock group.

8. Football

Free text

searching is likely to retrieve many documents that are not relevant to the search question. Such documents are called false positives. The retrieval of irrelevant documents is often caused by the inherent ambiguity of natural language; for example, in the United States, football refers to what is called American football outside the U.S.; throughout the rest of the world, football refers to what Americans call soccer. A search for football may retrieve documents that are about two completely different sports.

9. Cobra

href="http://www.sims.berkeley.edu/courses/is141/f05/lectures/jpedersen.pdf">Jan Pedersen, Chief Scientist, Yahoo! Search wrote on 19 September 2005 about The Four Dimensions of Search Engine Quality and in the chapter about Handling Ambiguity

brought nine pictures of different things called "cobra" (snake, car, helicopter etc.)

10. China

Quickly finding documents is indeed easy. Finding relevant documents, however, is a challenge that information retrieval (IR) researchers have been addressing for more than 40 years. The numerous ambiguities inherent in natural language make this search problem incredibly difficult. For example, a query about "China" can refer to either a country or dinnerware.

11. Cook

http://www.sciam.com/article.cfm?articleID=00048144-10D2-1C70-84A9809EC588EF21&pageNumber=5&catID=2

An intelligent search program can sift through all the pages of people whose name is "Cook" (sidestepping all the pages relating to cooks, cooking, the Cook Islands and so forth),

12. cat/cats

http://en.wikipedia.org/wiki/Folksonomy

Phenomena that may cause problems include polysemy, words which have multiple related meanings (a window can be a hole or a sheet of glass); synonym, multiple words with the same or similar meanings (tv and television, or Netherlands/Holland/Dutch) and plural words (cat and cats)

Friday, January 20, 2006

Search Engine Spider Identification

On my Site Meter I get mysterious numbers like 64. 233. 173. 85 or like 193. 47. 80.

Theoretically I knew that spiders visit my Blog but I never knew how to trace them. Today I saw an excellent guide by John A Fotheringham with a list of Spider Identifications. I tried to search there for 64.233.173.85 and other such numbers and got no match. Suddenly I had this bright idea to Google "64.233.173.85" and voila – I got it on Search Engine Spider Identification And it’s the Google Spider!

Then I saw on my Site Meter that the spiders' name (Google) is on the ISP field.

So I got some confidence and tried to trace the 193.47.80 spider and voila – on the ISP field I saw that it's Exalead, about which I just posted

Theoretically I knew that spiders visit my Blog but I never knew how to trace them. Today I saw an excellent guide by John A Fotheringham with a list of Spider Identifications. I tried to search there for 64.233.173.85 and other such numbers and got no match. Suddenly I had this bright idea to Google "64.233.173.85" and voila – I got it on Search Engine Spider Identification And it’s the Google Spider!

Then I saw on my Site Meter that the spiders' name (Google) is on the ISP field.

So I got some confidence and tried to trace the 193.47.80 spider and voila – on the ISP field I saw that it's Exalead, about which I just posted

Thursday, January 19, 2006

Exalead

Exalead is a France-based company that is involved in the President Jacques Chirac's initiative to develop Quaero , a European substitute for Google.

From my point of view Exalead is another macro content search engine with old fashioned snippets and I don't see where they take their confidence from when they try to compete with Google which is the best in this macro content field.

Only micro content strategy will help them change the basic rules of the game.

I liked Exalead's wonderful feature of "related terms" that takes you directly to the webpages you need.

I think it’s a big step towards one answer machine.

Here are some excerpts I collected in order to get acquainted with Exalead:

http://www.pandia.com/sew/149-the-multimedia-search-engine-quaero-europe%E2%80%99s-answer-to-google.html

Several companies are involved in the Quaero project along with Thompson. AFP’s article mentions Deutsche Telecom, France Telecom, and the search engine Exalead. This is very promising – Exalead has an interface that makes Google look out of date.

http://www.seroundtable.com/archives/001385.html

January 13, 2005

Exalead Searching 1,031,065,733 Web Pages

A while back, Google surpassed the 8 billion mark, and Gigablast Broke 1k the other day. John Battellle reported; Exalead, a company that powers AOL France's search (I was introduced to its founder by Alta Vista founder Louis Monier - yup, he's French) announced today that its stand alone search engine has surpassed the 1-billion-pages-indexed mark. (The engine launched in October)." Louis Monier was on the panel at SES on the search memories session, you can see his picture there, smart, visionary and funny guy.

Gary Price reports that Exalead's Paris-based CEO, Francois Bourdoncle, said "that the company plans to have a two billion page web index online in the near future. He also said that his company is about just ready to introduce a desktop search tool."

http://www.searchenginejournal.com/index.php?p=1752

Although Exalead’s results are not as relevant as the top search engines, Exalead is doing some interesting things on the presentation side.

Exalead allows users to filter by related search terms, related categories (clustering), web site location (local search), and document type. Users can also restrict results to websites containing links to audio or video content.

All results have a thumbnail of the homepage.

But thats not all Exalead is experimenting with. Clicking on a result loads up the page in a bottom frame with the search terms highlighted on the page. This allows for quick scanning and a fast way to determine if the result is relevant to the initial search. If the page isn’t relevant, users have instant access to the search results.

http://enterprise.amd.com/downloadables/Exalead_ISS_20050713a.pdf

In 2004, the Paris, France-based company wanted to showcase a new international searching service on powerful enterprise-class technology, in the quest to become the No. 1 information-access leader.

Although Exalead started business in 2000, its roots in search engine technology run deeper. Exalead's founders developed the company's dynamic categorization technology while working at the Ecoles des Mines de Paris, one of the top engineering schools in France. Exalead CEO François Bourdoncle and board member Louis Monier were early developers of the AltaVista search engine, and they wanted to build a similar vehicle for the Web.

2. http://searchenginewatch. com/searchday/article. php/3507266 Introducing a New Web Search Contender

These days, web search is dominated by giants, and it's rare to see the emergence of a new potentially world-class search engine. Meet Exalead, a powerful search tool with features not offered by the major search engines.

Exalead is a fairly new search engine from France, introduced in October 2004 and still officially in beta. Having passed the one-billion page mark in 2005, it's still 1/8th the size of Google or Yahoo, but what's a few billion pages among friends? Actually, after a certain point, size really doesn't matter.

The key factors in evaluating a search engine should include timeliness, ability to handle ambiguity, and plenty of power search tools. Exalead does a great job, at least on two of these three criteria.

Exalead is one of the only search engines to allow proximity searching(!), in which the words you search must be within 16 words of each other. (No, you can't tweak the number of intervening words. )

Exalead also lets you use "Regular Expressions," in which you can search for documents with words that match a certain pattern. Imagine, for example, that you're doing a crossword puzzle and have a word of 6 letters, of which the second is T and the sixth is C. By searching /. t. . . c/, you will retrieve sites with the word ATOMIC, perhaps the right word for your puzzle.

3. http://www. pandia. com/sew/110-interesting-exalead-upgrade. html » Interesting Exalead upgrade

The French search engine Exalead has been given fair credit for its advanced search functions, including truncation, proximity search, stemming, phonetic search, language field search. There has now been an interesting upgrade.

Since December 2004 there has been an option letting you add your own links under the search field on the search engine’s home page, giving you the opportunity to turn the Exalead home page into your own portal. Now you may add up till 18 shortcuts of this kind. Hence you may add links to other search engines, making it possible to do your search using other search engines by clicking on the thumbnail appearing under the search form.

4. http://www. erexchange. com/BLOGS/CyberSleuthing?LISTINGID=AFA82E22391841F1B820D26BEB43A351 CyberSleuthing!: Exalead goes full circle | ERE Blog Network

This includes sponsored links by Espotting, and also Exalead Web technology that uses statistical analysis to compare the search terms with the content found in Web pages. When searching for say, "Natacha, hotesse de l'air", the name of a comic strip by Walthיry about an air stewardess, Exalead takes you straight to the site of the artist, grifil. net. The same search with Google gives results overrun with extraneous content.

Exalead the company has been around for 4 years, and the "beta" search was announced so why the sudden news coverage? There is little note worthy news stories about Exalead prior to May 20th of this year.

From my point of view Exalead is another macro content search engine with old fashioned snippets and I don't see where they take their confidence from when they try to compete with Google which is the best in this macro content field.

Only micro content strategy will help them change the basic rules of the game.

I liked Exalead's wonderful feature of "related terms" that takes you directly to the webpages you need.

I think it’s a big step towards one answer machine.

Here are some excerpts I collected in order to get acquainted with Exalead:

http://www.pandia.com/sew/149-the-multimedia-search-engine-quaero-europe%E2%80%99s-answer-to-google.html

Several companies are involved in the Quaero project along with Thompson. AFP’s article mentions Deutsche Telecom, France Telecom, and the search engine Exalead. This is very promising – Exalead has an interface that makes Google look out of date.

http://www.seroundtable.com/archives/001385.html

January 13, 2005

Exalead Searching 1,031,065,733 Web Pages

A while back, Google surpassed the 8 billion mark, and Gigablast Broke 1k the other day. John Battellle reported; Exalead, a company that powers AOL France's search (I was introduced to its founder by Alta Vista founder Louis Monier - yup, he's French) announced today that its stand alone search engine has surpassed the 1-billion-pages-indexed mark. (The engine launched in October)." Louis Monier was on the panel at SES on the search memories session, you can see his picture there, smart, visionary and funny guy.

Gary Price reports that Exalead's Paris-based CEO, Francois Bourdoncle, said "that the company plans to have a two billion page web index online in the near future. He also said that his company is about just ready to introduce a desktop search tool."

http://www.searchenginejournal.com/index.php?p=1752

Although Exalead’s results are not as relevant as the top search engines, Exalead is doing some interesting things on the presentation side.

Exalead allows users to filter by related search terms, related categories (clustering), web site location (local search), and document type. Users can also restrict results to websites containing links to audio or video content.

All results have a thumbnail of the homepage.

But thats not all Exalead is experimenting with. Clicking on a result loads up the page in a bottom frame with the search terms highlighted on the page. This allows for quick scanning and a fast way to determine if the result is relevant to the initial search. If the page isn’t relevant, users have instant access to the search results.

http://enterprise.amd.com/downloadables/Exalead_ISS_20050713a.pdf

In 2004, the Paris, France-based company wanted to showcase a new international searching service on powerful enterprise-class technology, in the quest to become the No. 1 information-access leader.

Although Exalead started business in 2000, its roots in search engine technology run deeper. Exalead's founders developed the company's dynamic categorization technology while working at the Ecoles des Mines de Paris, one of the top engineering schools in France. Exalead CEO François Bourdoncle and board member Louis Monier were early developers of the AltaVista search engine, and they wanted to build a similar vehicle for the Web.

2. http://searchenginewatch. com/searchday/article. php/3507266 Introducing a New Web Search Contender

These days, web search is dominated by giants, and it's rare to see the emergence of a new potentially world-class search engine. Meet Exalead, a powerful search tool with features not offered by the major search engines.

Exalead is a fairly new search engine from France, introduced in October 2004 and still officially in beta. Having passed the one-billion page mark in 2005, it's still 1/8th the size of Google or Yahoo, but what's a few billion pages among friends? Actually, after a certain point, size really doesn't matter.

The key factors in evaluating a search engine should include timeliness, ability to handle ambiguity, and plenty of power search tools. Exalead does a great job, at least on two of these three criteria.

Exalead is one of the only search engines to allow proximity searching(!), in which the words you search must be within 16 words of each other. (No, you can't tweak the number of intervening words. )

Exalead also lets you use "Regular Expressions," in which you can search for documents with words that match a certain pattern. Imagine, for example, that you're doing a crossword puzzle and have a word of 6 letters, of which the second is T and the sixth is C. By searching /. t. . . c/, you will retrieve sites with the word ATOMIC, perhaps the right word for your puzzle.

3. http://www. pandia. com/sew/110-interesting-exalead-upgrade. html » Interesting Exalead upgrade

The French search engine Exalead has been given fair credit for its advanced search functions, including truncation, proximity search, stemming, phonetic search, language field search. There has now been an interesting upgrade.

Since December 2004 there has been an option letting you add your own links under the search field on the search engine’s home page, giving you the opportunity to turn the Exalead home page into your own portal. Now you may add up till 18 shortcuts of this kind. Hence you may add links to other search engines, making it possible to do your search using other search engines by clicking on the thumbnail appearing under the search form.

4. http://www. erexchange. com/BLOGS/CyberSleuthing?LISTINGID=AFA82E22391841F1B820D26BEB43A351 CyberSleuthing!: Exalead goes full circle | ERE Blog Network

This includes sponsored links by Espotting, and also Exalead Web technology that uses statistical analysis to compare the search terms with the content found in Web pages. When searching for say, "Natacha, hotesse de l'air", the name of a comic strip by Walthיry about an air stewardess, Exalead takes you straight to the site of the artist, grifil. net. The same search with Google gives results overrun with extraneous content.

Exalead the company has been around for 4 years, and the "beta" search was announced so why the sudden news coverage? There is little note worthy news stories about Exalead prior to May 20th of this year.

Wednesday, January 18, 2006

Cow9

An infobroker who reviewed QT/Search told me that the suggestions for further search are similar to Cow9. A quick search on QT/Search for the word Cow9 taught me that this feature disappeared from Alta Vista. I'm not a journalist so I didn't dig into this story, but I feel it in my bones that there is something juicy to discover here about the reasons why such a wonderful feature disappeared just like that. Another interesting angle to explore is how the writer who wrote a whole guide to Cow9 feels when he sees the empty space that replaced the cow9 refine button.

So here's what I collected about cow9:

1. http://www. samizdat. com/script/lt1. htm AltaVista's LiveTopics, AKA Refine, code-named Cow9

Cow9 is a powerful feature that was once offered through AltaVista, first under the name LiveTopics, later called Refine. The project, code named "Cow9," was a collaborative effort between researchers at Digital Equipment Corporation and François Bourdoncle of Ecole des Mines de Paris, www. ensmp. fr The underlying technology has enormous potential. To get a sense for how it can be used, check these slides and the screen-capture examples. (FYI -- the screen captures were made in October 1997).

2. http://www.wadsworth. com/english_d/templates/resources/0838408265_harbrace/research/research12.html Student Resources

Use the "Cow9" function to refine, or narrow, your search by selecting the "Refine" button located to the right of the query field. By doing so, the "Refine's List View" will display the results in the order of relevance so that you can require or exclude topics using a drop-down menu.

3. http://tsc.k12.in.us/training/SEARCH/ALTAVIST/HELP.HTM

LiveTopics is a tool that helps you refine and analyze the results of an AltaVista search.

LiveTopics analyzes the contents of documents that meet your original search criteria and displays groups of additional words, called topics, to use in refining your query. Topics are dynamically generated from words that occur frequently in the documents that match your initial search criteria. Topics appear in order of relevance, and words inside a topic are ordered by frequency of occurrence.

This dynamic generation of related topics, tailored to each search, distinguishes LiveTopics from other web search aids, which offer predetermined categories or structures into which you must fit your query.

The LiveTopics technology was developed for AltaVista by Francois Bourdoncle of the Ecole des Mines de Paris/ARMINES.

4. http://www. buffalo.edu/reporter/vol28/vol28n23/eh.html

Mar. 6, 1997-Vol28n23: Electronic Highways: LiveTopics from AltaVista

AltaVista now offers LiveTopics to assist you in refining the results of your initial search. This new tool offers related terms or topics, generated from your initial search for you to select, or exclude, to help you narrow your search. The terms are produced by frequency in which the word appears in the set of documents.

Choosing LiveTopics offers terms such as qualifications, racial, candidates, tenure, hiring, diversity, women, and recruitment. In addition you may choose subcategories such as salary, position, experience, and minorities. You may then choose to include or omit certain terms from your initial search, reducing the number of documents originally found.

LiveTopics is most useful when you receive more than 200 documents in your initial search. Anything smaller usually results in irrelevant related terms.

5. http://www.ariadne.ac.uk/issue9/search-engines

If you perform a search for 'Ariadne' on Alta Vista you retrieve around 9000 documents. None of our first 10 hits appear to be relevant to our search for the journal as they are links to software companies of the same name. With a set of results of this size, Alta Vista automatically prompts you to refine your search using LiveTopics.LiveTopics brings up a number of topic headings - including mythology, Amiga, Goddess, OPACS, UKOLN and Libraries. We can now exclude all of the irrelevant documents from our search by clicking on the irrelevant subjects to place a cross in the boxes next to them.

This brings my search results down from 9000 documents to around 200. I can then go back and further refine my search if necessary.Alta Vista prompts me to use LiveTopics to summarise my results: if I choose this option it will re-define the categories of topics in my search results and present me with a new set of options based on these dynamic categories.

6. http://www.faculty.de.gcsu.edu/~hpowers/search/altavist.htm

Alta Vista's REFINE feature provides an online thesaurus based on the terms in each of your search results.The terms it offers are based on a statistical analysis of the frequency of appearance of words in the Web pages your search retrieved (they are not drawn from some standardized thesaurus or Websters). They are ranked with most frequent first.

You can modify your search by excluding or adding terms from the groups offered by the thesaurus .

So here's what I collected about cow9:

1. http://www. samizdat. com/script/lt1. htm AltaVista's LiveTopics, AKA Refine, code-named Cow9

Cow9 is a powerful feature that was once offered through AltaVista, first under the name LiveTopics, later called Refine. The project, code named "Cow9," was a collaborative effort between researchers at Digital Equipment Corporation and François Bourdoncle of Ecole des Mines de Paris, www. ensmp. fr The underlying technology has enormous potential. To get a sense for how it can be used, check these slides and the screen-capture examples. (FYI -- the screen captures were made in October 1997).

2. http://www.wadsworth. com/english_d/templates/resources/0838408265_harbrace/research/research12.html Student Resources

Use the "Cow9" function to refine, or narrow, your search by selecting the "Refine" button located to the right of the query field. By doing so, the "Refine's List View" will display the results in the order of relevance so that you can require or exclude topics using a drop-down menu.

3. http://tsc.k12.in.us/training/SEARCH/ALTAVIST/HELP.HTM

LiveTopics is a tool that helps you refine and analyze the results of an AltaVista search.

LiveTopics analyzes the contents of documents that meet your original search criteria and displays groups of additional words, called topics, to use in refining your query. Topics are dynamically generated from words that occur frequently in the documents that match your initial search criteria. Topics appear in order of relevance, and words inside a topic are ordered by frequency of occurrence.

This dynamic generation of related topics, tailored to each search, distinguishes LiveTopics from other web search aids, which offer predetermined categories or structures into which you must fit your query.

The LiveTopics technology was developed for AltaVista by Francois Bourdoncle of the Ecole des Mines de Paris/ARMINES.

4. http://www. buffalo.edu/reporter/vol28/vol28n23/eh.html

Mar. 6, 1997-Vol28n23: Electronic Highways: LiveTopics from AltaVista

AltaVista now offers LiveTopics to assist you in refining the results of your initial search. This new tool offers related terms or topics, generated from your initial search for you to select, or exclude, to help you narrow your search. The terms are produced by frequency in which the word appears in the set of documents.

Choosing LiveTopics offers terms such as qualifications, racial, candidates, tenure, hiring, diversity, women, and recruitment. In addition you may choose subcategories such as salary, position, experience, and minorities. You may then choose to include or omit certain terms from your initial search, reducing the number of documents originally found.

LiveTopics is most useful when you receive more than 200 documents in your initial search. Anything smaller usually results in irrelevant related terms.

5. http://www.ariadne.ac.uk/issue9/search-engines

If you perform a search for 'Ariadne' on Alta Vista you retrieve around 9000 documents. None of our first 10 hits appear to be relevant to our search for the journal as they are links to software companies of the same name. With a set of results of this size, Alta Vista automatically prompts you to refine your search using LiveTopics.LiveTopics brings up a number of topic headings - including mythology, Amiga, Goddess, OPACS, UKOLN and Libraries. We can now exclude all of the irrelevant documents from our search by clicking on the irrelevant subjects to place a cross in the boxes next to them.

This brings my search results down from 9000 documents to around 200. I can then go back and further refine my search if necessary.Alta Vista prompts me to use LiveTopics to summarise my results: if I choose this option it will re-define the categories of topics in my search results and present me with a new set of options based on these dynamic categories.

6. http://www.faculty.de.gcsu.edu/~hpowers/search/altavist.htm

Alta Vista's REFINE feature provides an online thesaurus based on the terms in each of your search results.The terms it offers are based on a statistical analysis of the frequency of appearance of words in the Web pages your search retrieved (they are not drawn from some standardized thesaurus or Websters). They are ranked with most frequent first.

You can modify your search by excluding or adding terms from the groups offered by the thesaurus .

Tuesday, January 17, 2006

World Brain

1. about 70 years ago H.G. Wells in his book World Brain (1938)

In those days nobody could have taken him seriously but the late advancements in search engines technology raise afresh the question of the feasibility of this vision.

2. Fifty years ago Eugene Garfield invented "citation analysis" (1955) as a step in the fulfillment of H.G. Wells' World Brain vision and commented (1981) that

3. Five years ago Google founders, Sergey Brin and Lawrence Page, wrote a paper about the need to make Google. They cited Garfield's work . It seems that Google (with other search engines) is another step in the fulfillment of this vision. Eric Magnuson ,for example, gave one of his Blog postings the following title: Google creating the world brain.

4. Nowadays the Web 2.0 microcontent revolution seems to be another step in the fulfillment of this World Brain vision. Gooogle users get macro-content pages (very quickly) with much more information than they need. Microcontent search engines are going to supply the exact amount of needed information and to organize it much more efficiently. H.G. Wells vision was to build a good world brain, not a jabberer.

envisioned a 'World Encyclopedia' in which multidisciplinary research information of a global nature would be gathered together and made available for the immediate use of anyone in the world.

In those days nobody could have taken him seriously but the late advancements in search engines technology raise afresh the question of the feasibility of this vision.

2. Fifty years ago Eugene Garfield invented "citation analysis" (1955) as a step in the fulfillment of H.G. Wells' World Brain vision and commented (1981) that

computer technology of our own day is beginning to make such a concept feasible.

3. Five years ago Google founders, Sergey Brin and Lawrence Page, wrote a paper about the need to make Google. They cited Garfield's work . It seems that Google (with other search engines) is another step in the fulfillment of this vision. Eric Magnuson ,for example, gave one of his Blog postings the following title: Google creating the world brain.

4. Nowadays the Web 2.0 microcontent revolution seems to be another step in the fulfillment of this World Brain vision. Gooogle users get macro-content pages (very quickly) with much more information than they need. Microcontent search engines are going to supply the exact amount of needed information and to organize it much more efficiently. H.G. Wells vision was to build a good world brain, not a jabberer.

Monday, January 16, 2006

PageRank

Today I stumbled upon Prase, a new Page Ranking search engine, and started experimenting with it. Soon I discovered that I need to know the basics of Page Ranking in order to understand how to make the most out of it - so I entered the word PageRank into QT/Search to collect some chunks:

About Prase

http://www. hawaiistreets. com/seoblog/seoblog. php?itemid=547

Prase stands for PageRank Assisted Search Engine. As of today, I think Prase could be the best link-building tool there is. First of all, it's free and second of all, it lacks the spammy downloads other companies offer you. I've been playing around with Prase for a few minutes this morning and am already taken in by features it has to offer.

1. Search multiple databases by keywords Prase gives you the ability to select from Google and/or Yahoo! and/or MSN as database providers. Simply enter a keyword you associate with a perfect link partner and Prase will return you the results.

2. Sort by PageRank Before you begin a search, you have an option of choosing a PageRank range. This is especially useful if you are trying to either gain backlinks from a high PR site or bypass those sites all together.

When you search, results are sorted for you from highest PR to the lowest.

Start the search anywhere you want You can tell Prase where to begin displaying search results. A useful feature again if you are trying to avoid top sites and target medium or lower end sites. Prase is going on to my del. icio. us list for great service and usability. As an SEO, I am very impressed with service it offers putting to shame companies that try to sell you similar software.

http://www. geekvillage. com/forums/showthread. php?s=e542a4abe1c1e7b25a40743f60c6373d&postid=163100#post163100

There have been other Page Rank search engines, but this one is a bit different in the way it does things.

This search engine would mainly be of interest to people who want to see sites listed according to PageRank. That would include people interested in exchanging links with sites based on their PageRank.

Great they have a higher PageRank, but you are higher in the search results. Since PRASE has results listed by Page Rank, the best site for the searched term isn't always shown at or near the top. That isn't to imply that what is always shown first in the search results is always the best when they aren't arrange by PageRank. With PRASE it is easy to see that the sites with the highest PageRank often aren't the ones that are first in the natural results. The results are sorted three times by PRASE First they are sorted by search engine. Then they are sorted according to PageRank. Then they are finalized by listing them in decending order based on their search engine ranking.

If there is a tie in search engine ranking, the listing is done based upon search engine market share with Google first, Yahoo second and then MSN. The main purpose of PRASE is to search based upon PageRank and to easily show that. It has an added feature that allows you to search for sites within a certain range. The default is between 0 and 9.

About PageRank

1. http://en. wikipedia. org/wiki/PageRank

PageRank, sometimes abbreviated to PR, is a family of algorithms for assigning numerical weightings to hyperlinked documents (or web pages) indexed by a search engine originally developed by Larry Page (thus the play on the words PageRank). Its properties are much discussed by search engine optimization (SEO) experts. The PageRank system is used by the popular search engine Google to help determine a page's relevance or importance. It was developed by Google's founders Larry Page and Sergey Brin while at Stanford University in 1998.

PageRank relies on the uniquely democratic nature of the web by using its vast link structure as an indicator of an individual page's value. Google interprets a link from page A to page B as a vote, by page A, for page B. But Google looks at more than the sheer volume of votes, or links a page receives; it also analyzes the page that casts the vote.

A hyperlink to a page counts as a vote of support. The PageRank of a page is defined recursively and depends on the number and PageRank metric of all pages that link to it ("incoming links"). A page that is linked by many pages with high rank receives a high rank itself. If there are no links to a web page there is no support of this specific page.

http://en.wikipedia.org/wiki/Google_search

Google uses an algorithm called PageRank to rank web pages that match a given search string. The PageRank algorithm computes a recursive figure of merit for web pages, based on the weighted sum of the PageRanks of the pages linking to them. The PageRank thus derives from human-generated links, and correlates well with human concepts of importance. Previous keyword-based methods of ranking search results, used by many search engines that were once more popular than Google, would rank pages by how often the search terms occurred in the page, or how strongly associated the search terms were within each resulting page. In addition to PageRank, Google also uses other secret criteria for determining the ranking of pages on result lists.

Search engine optimization encompasses both "on page" factors (like body copy, title tags, H1 heading tags and image alt attributes) and "off page" factors (like anchor text and PageRank). The general idea is to affect Google's relevance algorithm by incorporating the keywords being targeted in various places "on page," in particular the title tag and the body copy (note: the higher up in the page, the better its keyword prominence and thus the ranking). Too many occurrences of the keyword, however, cause the page to look suspect to Google's spam checking algorithms.

http://en.wikipedia.org/wiki/Search_engine

PageRank is based on citation analysis that was developed in the 1950s by Eugene Garfield at the University of Pennsylvania. Google's founders cite Garfield's work in their original paper. In this way virtual communities of webpages are found.

http://www-db.stanford.edu/~backrub/google.html

The Anatomy of a Large-Scale Hypertextual Web Search Engine

Sergey Brin and Lawrence Page

sergey, page}@cs.stanford.edu

Computer Science Department, Stanford University, Stanford, CA 94305

http://www.iprcom.com/papers/pagerank/

PageRank is also displayed on the toolbar of your browser if you’ve installed the Google toolbar (http://toolbar. google. com/).

PageRank says nothing about the content or size of a page, the language it’s written in, or the text used in the anchor of a link!

The PageRank displayed in the Google toolbar in your browser. This ranges from 0 to 10.